Run DeepSeek R1 locally in 3 steps, and more...

Spark Streaming Cheat Sheet, Notion's Data Lake | Edition #16

What’s on the list today?

Run DeepSeek R1 locally

Deep Dive: Notion’s Data Lake Evolution

Spark Structured Streaming Cheatsheet

Run DeepSeek R1 Locally in 3 Steps

DeepSeek is the new AI player in town, and it's packing some serious heat. If you've given it a try, you know how capable it is.

Why rely on cloud services when you can run DeepSeek or any open-source AI model on your own machine? Here's how to bring AI power to your local setup.

Step 1 - Install Ollama. Ollama is a framework for running and managing large language models (LLMs) locally on your own hardware.

Follow the installation guide for your OS on the Ollama GitHub page.

Step 2 - Check the Ollama model library for available models, then pull the one you want to run from your terminal. For instance, download the 7-billion-parameter DeepSeek R1 model:

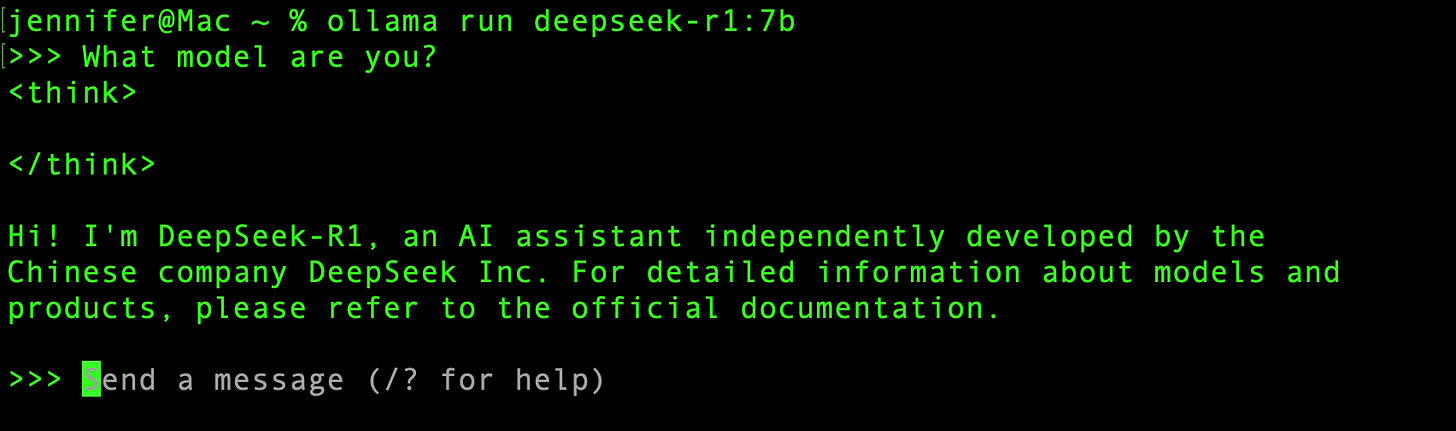

ollama run deepseek-r1:7b

This command downloads the model (if it is not already on your machine) and runs it locally. Watch out for very large models: the 671-billion-parameter version of deepseek-r1 is over 400 GB.
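Model size on disk is roughly parameters times bits per weight. A back-of-the-envelope sketch in Python; the bits-per-weight values are assumptions (quantized downloads often land around 4 to 5 bits per weight) and real files carry some extra overhead. At 4 bits, 671B parameters come to about 335.5 GB of weights; at 5 bits, about 419 GB, in line with the 400+ GB figure above.

```python
def estimate_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough weights-only size: parameters x bits per weight / 8 bits per byte.

    Assumption: ignores tokenizer files, metadata and mixed-precision layers,
    so real downloads are usually somewhat larger than this estimate.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9  # decimal gigabytes


if __name__ == "__main__":
    for params in (7, 671):
        for bits in (4, 5, 16):
            print(f"{params}B @ {bits}-bit: ~{estimate_size_gb(params, bits):.1f} GB")
```

Checking this against your free disk space before pulling a large tag can save a long, failed download.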

Step 3 - Interact directly from the terminal
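Besides the interactive prompt that `ollama run` opens, Ollama also serves a local HTTP API, by default at http://localhost:11434. A minimal sketch using only the Python standard library to send a prompt to the `/api/generate` endpoint with streaming disabled; the helper names `build_generate_request` and `ask` are hypothetical, and the default model tag assumes you pulled `deepseek-r1:7b` in Step 2.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address (assumption: unchanged)


def build_generate_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:7b", timeout: float = 300.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]  # with stream=False the full reply arrives in one JSON object
```

With the Ollama server running, `print(ask("Explain change data capture in one sentence."))` prints the whole reply at once; the long timeout allows for slow first responses while the model loads.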

No terminal time? No problem!

You can skip the command line and chat with DeepSeek through a cooler graphical interface. Your eyes (and your fingers) will thank you!

Step 4 - Install AnythingLLM

Create a new workspace in AnythingLLM and configure its LLM settings to use Ollama as the provider, with the deepseek-r1:7b model.
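Before pointing AnythingLLM at Ollama, it can help to confirm the model is actually pulled. A minimal sketch, assuming Ollama's default address; it reads the `GET /api/tags` endpoint, which lists locally available models. The function names are hypothetical. A model pulled without an explicit tag is listed as, for example, `deepseek-r1:latest`, so match the exact name you select in AnythingLLM.

```python
from __future__ import annotations

import json
import urllib.request


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from the JSON returned by Ollama's GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def is_model_available(model: str, base_url: str = "http://localhost:11434") -> bool:
    """Return True if the local Ollama server already has `model` pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        tags = json.loads(resp.read().decode("utf-8"))
    return model in model_names(tags)
```

If `is_model_available("deepseek-r1:7b")` raises a connection error, Ollama is not running at that address, which is also the base URL AnythingLLM needs to reach.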

Chat away!

Notion’s Data Lake Evolution

Notion, a powerful workspace tool, has become synonymous with productivity. Behind the scenes, the company has tackled the monumental task of scaling its data infrastructure to keep up with user-generated content, which has grown 10x over the last few years. If you’re a data engineer, Notion’s journey to building a data lake is a goldmine of practical insights, techniques, and tips that could change how you approach your own data challenges.

Notion’s Flexible Data Model

Much like LEGO bricks, everything you see in Notion is modeled as a block and stored in Postgres with a consistent schema. This model lets users customise how they organise and share information.

Each block has:

A unique ID.

Properties, which are custom attributes (e.g., a "title").

A Type that dictates how it is displayed.

Each block also has attributes that define its relationships with other blocks:

Content, an array of IDs pointing to nested blocks.

Parent, an ID pointing to the parent block (used for permissions).
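To make the block model concrete, here is a small Python sketch of a block with the attributes listed above. The class, field names and sample data are illustrative assumptions, not Notion's actual Postgres schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Block:
    """A simplified Notion-style block (illustrative, not Notion's real schema)."""
    id: str                                          # unique ID
    type: str                                        # dictates how the block is displayed
    properties: dict = field(default_factory=dict)   # custom attributes, e.g. {"title": ...}
    content: list[str] = field(default_factory=list) # ordered IDs of nested blocks
    parent: Optional[str] = None                     # ID of the parent block


def children(block: Block, store: dict[str, Block]) -> list[Block]:
    """Resolve a block's `content` IDs into the child Block objects, in order."""
    return [store[child_id] for child_id in block.content]


page = Block(id="p1", type="page", properties={"title": "Roadmap"}, content=["b1", "b2"])
store = {
    "p1": page,
    "b1": Block(id="b1", type="text", properties={"title": "Q1 goals"}, parent="p1"),
    "b2": Block(id="b2", type="to_do", properties={"title": "Ship data lake"}, parent="p1"),
}
print([c.properties["title"] for c in children(page, store)])  # ['Q1 goals', 'Ship data lake']
```

`content` points down the tree and `parent` points up, so the same page can be rendered top-down or checked for permissions bottom-up.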

Before the Data Lake

Before implementing a data lake, Notion's architecture was built around a sharded Postgres database. Key aspects of this architecture include:

Sharded Postgres Database:

Consisted of 96 physical instances

480 logical shards for data management

Data Growth:

Data volume was growing rapidly, doubling every 6-12 months

ELT (Extract, Load, Transform) Pipeline:

Used Fivetran to transfer data to Snowflake

Extracted data from the Postgres write-ahead log (WAL) through 480 connectors, one per logical shard, run hourly

Merged individual tables in Snowflake for analytics and machine learning
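The two shard counts above imply 480 / 96 = 5 logical shards per physical instance. A minimal sketch of two-level routing, assuming (hypothetically) that rows are routed by a workspace UUID hashed with SHA-256 and that each instance holds a contiguous range of logical shards; Notion's actual routing function may differ.

```python
import hashlib
import uuid

LOGICAL_SHARDS = 480
PHYSICAL_INSTANCES = 96
SHARDS_PER_INSTANCE = LOGICAL_SHARDS // PHYSICAL_INSTANCES  # 5


def logical_shard(workspace_id: uuid.UUID) -> int:
    """Map a workspace to a logical shard (hash scheme is an assumption)."""
    digest = hashlib.sha256(workspace_id.bytes).digest()
    return int.from_bytes(digest[:8], "big") % LOGICAL_SHARDS


def physical_instance(shard: int) -> int:
    """Assume contiguous ranges: shards 0-4 on instance 0, 5-9 on instance 1, and so on."""
    if not 0 <= shard < LOGICAL_SHARDS:
        raise ValueError(f"shard out of range: {shard}")
    return shard // SHARDS_PER_INSTANCE
```

Keeping more logical shards than physical machines means a shard can later move to a new instance without rehashing rows. In this sketch the shard-to-instance mapping is arithmetic; a production system would more likely keep an explicit lookup table so individual shards can be relocated.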

Challenges with Notion's Pre-Data Lake Architecture

Operational Overhead: Managing 480 Fivetran connectors was a significant burden.

Slow Data Ingestion: The update-heavy workload (roughly 90% updates) made loading data into Snowflake slow and costly.

Complex Transformations: Some transformations were too complex to express with standard SQL alone.

Resource-Intensive Processing: Generating denormalized views for features like AI and Search required costly ancestor tree traversals.
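Why are ancestor traversals costly? A denormalized view needs, for every block, the chain of parents up to the root (for example, to resolve permissions inherited from a page or workspace). A naive Python sketch, with a hypothetical in-memory `parent_of` map standing in for the Postgres `parent` column:

```python
from typing import Dict, List, Optional


def ancestors(block_id: str, parent_of: Dict[str, Optional[str]]) -> List[str]:
    """Walk parent pointers from a block up to the root, returning ancestor IDs."""
    path: List[str] = []
    seen = {block_id}
    current = parent_of.get(block_id)
    while current is not None:
        if current in seen:  # guard against accidental cycles in the data
            raise ValueError(f"cycle detected at {current}")
        seen.add(current)
        path.append(current)
        current = parent_of.get(current)
    return path


def denormalize(parent_of: Dict[str, Optional[str]]) -> Dict[str, List[str]]:
    """Precompute every block's ancestor path (one full walk per block)."""
    return {block_id: ancestors(block_id, parent_of) for block_id in parent_of}


parent_of = {"workspace": None, "page": "workspace", "toggle": "page", "text": "toggle"}
print(denormalize(parent_of)["text"])  # ['toggle', 'page', 'workspace']
```

Done this way, the work grows with the number of blocks times the tree depth. Memoizing ancestor paths, or computing them level by level in a distributed engine, avoids repeating the same walks.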

Data Lake Architecture

Notion transformed its architecture to enable real-time, scalable data processing and storage. Key improvements include:

Cost Optimization:

Shifted critical datasets to a cost-efficient data lake on Amazon S3, reducing storage costs while maintaining scalability

Real-Time Ingestion:

Adopted CDC (Change Data Capture) with Debezium, streaming live changes to Kafka

Eliminated batch processing delays and enabled real-time updates
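With CDC, every insert, update and delete on a Postgres row becomes an event. A simplified sketch of how a sink could replay Debezium-style events, assuming each event carries only the `op` code (`c` create, `u` update, `d` delete, `r` snapshot read) plus `before` and `after` row images; real Debezium messages wrap these in an envelope with schema and source metadata, which is omitted here. Function names are hypothetical.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_change(table: Dict[Any, Row], event: Dict[str, Any], key: str = "id") -> None:
    """Apply one Debezium-style change event to an in-memory table keyed by `key`."""
    op = event["op"]
    if op in ("c", "r", "u"):  # create, snapshot read, update: upsert the new row image
        row = event["after"]
        table[row[key]] = row
    elif op == "d":
        # Delete: with Postgres' default replica identity, `before` may hold only the key.
        table.pop(event["before"][key], None)
    else:
        raise ValueError(f"unsupported op: {op!r}")


def replay(events: Iterable[Dict[str, Any]], key: str = "id") -> Dict[Any, Row]:
    """Replay events in log order and return the resulting table state."""
    table: Dict[Any, Row] = {}
    for event in events:
        apply_change(table, event, key)
    return table


events = [
    {"op": "c", "before": None, "after": {"id": "b1", "title": "Draft"}},
    {"op": "u", "before": {"id": "b1", "title": "Draft"}, "after": {"id": "b1", "title": "Final"}},
    {"op": "c", "before": None, "after": {"id": "b2", "title": "Temp"}},
    {"op": "d", "before": {"id": "b2"}, "after": None},
]
print(replay(events))  # {'b1': {'id': 'b1', 'title': 'Final'}}
```

With a workload that is roughly 90% updates, many events collapse onto the same key, which is why an upsert-friendly table format matters on the lake side.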

Efficient Data Storage:

Implemented Apache Hudi to manage data stored on Amazon S3, with efficient upserts and incremental snapshots
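Hudi handles upserts through a few write options. A hedged sketch of the options for upserting CDC records from Spark; the option keys are standard Hudi datasource configs, but the field names (`id`, `event_ts`, `shard_id`), the default table type and the helper names are assumptions for illustration, not Notion's published configuration.

```python
from typing import Dict


def hudi_upsert_options(table: str, record_key: str = "id",
                        precombine: str = "event_ts",
                        partition_path: str = "shard_id",
                        table_type: str = "COPY_ON_WRITE") -> Dict[str, str]:
    """Hudi write options for upserting CDC records (field names are hypothetical)."""
    if table_type not in ("COPY_ON_WRITE", "MERGE_ON_READ"):
        raise ValueError(f"unsupported table type: {table_type}")
    return {
        "hoodie.table.name": table,
        "hoodie.datasource.write.table.type": table_type,
        "hoodie.datasource.write.operation": "upsert",
        # Incoming rows with the same record key update the existing record...
        "hoodie.datasource.write.recordkey.field": record_key,
        # ...and if one batch holds several versions of a key, the larger precombine value wins.
        "hoodie.datasource.write.precombine.field": precombine,
        "hoodie.datasource.write.partitionpath.field": partition_path,
    }


def write_upserts(df, path: str, table: str) -> None:
    """Upsert a Spark DataFrame into a Hudi table (requires the Hudi Spark bundle)."""
    df.write.format("hudi").options(**hudi_upsert_options(table)).mode("append").save(path)
```

COPY_ON_WRITE rewrites affected files on each write and keeps reads simple; MERGE_ON_READ appends updates to log files and merges them at query time, trading read cost for cheaper writes.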

Scalable Processing:

Switched to Apache Spark for distributed processing, handling large-scale data transformations in parallel

Enabled faster data insights and avoided performance bottlenecks

Hybrid Data Ingestion:

Adopted a hybrid approach, combining incremental updates with periodic full snapshots

Ensured data freshness, completeness, and consistency across systems
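One way to combine full snapshots with incremental updates: load a snapshot taken at a known log position, then replay only the change events after that position. A minimal Python sketch; the function name, tuple layout and integer positions are assumptions (in Postgres the position would be an LSN).

```python
from typing import Any, Dict, Iterable, List, Tuple

Row = Dict[str, Any]


def bootstrap_then_catch_up(snapshot: List[Row], snapshot_pos: int,
                            events: Iterable[Tuple[int, str, Row]],
                            key: str = "id") -> Dict[Any, Row]:
    """Rebuild a table from a full snapshot plus the change events that followed it.

    Events are (position, op, row) tuples with op in {"upsert", "delete"}.
    Events at or before `snapshot_pos` are already reflected in the snapshot.
    """
    table = {row[key]: row for row in snapshot}
    for pos, op, row in sorted(events, key=lambda e: e[0]):
        if pos <= snapshot_pos:
            continue  # already covered by the snapshot
        if op == "upsert":
            table[row[key]] = row
        elif op == "delete":
            table.pop(row[key], None)
        else:
            raise ValueError(f"unknown op: {op!r}")
    return table


snapshot = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}]
events = [
    (90, "upsert", {"id": 1, "v": "old"}),  # before the snapshot: skipped
    (110, "upsert", {"id": 2, "v": "c"}),
    (120, "delete", {"id": 1}),
    (130, "upsert", {"id": 3, "v": "d"}),
]
print(bootstrap_then_catch_up(snapshot, 100, events))
# {2: {'id': 2, 'v': 'c'}, 3: {'id': 3, 'v': 'd'}}
```

Re-running the bootstrap periodically from a fresh snapshot gives a known-good baseline if incremental processing ever drifts.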

Key Takeaways for Data Engineers:

• Adopt Change Data Capture (CDC) for real-time updates.

• Leverage Apache Hudi for efficient data storage management.

• Use Apache Spark for scalable data processing.

• Implement a hybrid data ingestion strategy for consistent data accuracy.

• Explore data lakes built on object storage (e.g., Amazon S3) for cost-effective, scalable storage.

For a more detailed view of the architecture, see Notion's blog.

Spark Structured Streaming Cheatsheet

Spark Structured Streaming is a scalable, fault-tolerant stream processing engine that enables you to process real-time data streams using the same high-level API as batch processing.

Spark Structured Streaming is commonly used to load data incrementally from Delta tables. To help with this, we have compiled a cheatsheet that outlines all the available options.
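A minimal PySpark sketch of where such options plug in when incrementally loading one Delta table into another. It assumes a SparkSession with Delta Lake configured (Spark 3.3+ for `trigger(availableNow=True)`; `skipChangeCommits` depends on your Delta Lake version); the table names, checkpoint path and helper names are hypothetical. Only the options builder runs without Spark.

```python
from typing import Dict, Optional


def delta_source_options(starting_version: Optional[int] = None,
                         max_files_per_trigger: int = 1000,
                         skip_change_commits: bool = False) -> Dict[str, str]:
    """A few common Delta streaming-source options (values are illustrative)."""
    opts = {"maxFilesPerTrigger": str(max_files_per_trigger)}  # cap files per micro-batch
    if starting_version is not None:
        # Only used when the query starts without an existing checkpoint.
        opts["startingVersion"] = str(starting_version)
    if skip_change_commits:
        opts["skipChangeCommits"] = "true"  # ignore commits that update/delete existing rows
    return opts


def incremental_load(spark, source_table: str, target_table: str,
                     checkpoint: str, **source_kwargs):
    """Incrementally copy new rows from one Delta table into another."""
    stream = (spark.readStream.format("delta")
              .options(**delta_source_options(**source_kwargs))
              .table(source_table))
    return (stream.writeStream.format("delta")
            .option("checkpointLocation", checkpoint)  # lets a restarted query resume
            .trigger(availableNow=True)                # process what is available, then stop
            .toTable(target_table))
```

For example, `incremental_load(spark, "bronze.blocks", "silver.blocks", "s3://my-bucket/checkpoints/blocks").awaitTermination()` processes everything new since the last run and then exits, which suits scheduled incremental jobs.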

For running LLMs locally with a browser-based front end, Open WebUI is another open-source chat interface worth a look: https://github.com/open-webui/open-webui